Generative AI models are a class of artificial intelligence models designed to produce new data that follows the same distribution as the data they were trained on. They can create new content such as images, text, music, and even video, and the results can be realistic enough to be hard to distinguish from human-made content.

Generative AI models are usually built with deep learning. Well-known architectures include generative adversarial networks (GANs) and variational autoencoders (VAEs), as well as the autoregressive transformers behind large language models and the diffusion models used by many image generators. These models are trained on large datasets and learn to capture the underlying patterns and structure of the data, which lets them generate new samples with similar characteristics.
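The train-then-sample idea can be illustrated without any neural network. The sketch below is a deliberately tiny stand-in, not a GAN or VAE: a character-level bigram model that counts which character tends to follow which in a small, hypothetical word list, then generates new words by sampling from those counts. The function names `train_bigram` and `sample` and the word list are illustrative assumptions.

```python
import random
from collections import defaultdict

START, END = "^", "$"  # boundary markers, assumed not to appear inside the words

def train_bigram(corpus):
    """'Training': count how often each character follows another."""
    counts = defaultdict(lambda: defaultdict(int))
    for word in corpus:
        chars = [START] + list(word) + [END]
        for prev, nxt in zip(chars, chars[1:]):
            counts[prev][nxt] += 1
    return counts

def sample(counts, rng, max_len=20):
    """'Generation': draw each next character in proportion to how often
    it followed the previous character in the training data."""
    out, prev = [], START
    while len(out) < max_len:
        candidates = list(counts[prev])
        weights = [counts[prev][c] for c in candidates]
        nxt = rng.choices(candidates, weights=weights, k=1)[0]
        if nxt == END:
            break
        out.append(nxt)
        prev = nxt
    return "".join(out)

if __name__ == "__main__":
    model = train_bigram(["banana", "bandana", "cabana", "canal"])
    rng = random.Random(0)
    print([sample(model, rng) for _ in range(5)])
```

Every generated string is assembled only from character transitions observed during training, so outputs resemble the training words without necessarily copying them. Deep generative models learn far richer structure, but the same two phases (fit a model of the data, then sample from it) apply.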

Generative AI models have attracted wide attention because of this ability to produce realistic, novel content. They are used in art, design, entertainment, and scientific research: they generate images, write stories and articles, compose music, and assist in tasks such as drug discovery and protein structure modeling.

However, these models also raise important ethical concerns, such as the potential for generating fake content, spreading misinformation, or infringing on intellectual property rights. As the capabilities of generative AI models continue to advance, it is crucial to ensure responsible and ethical use to mitigate these risks.

What is Transfer Learning in Generative AI Models?

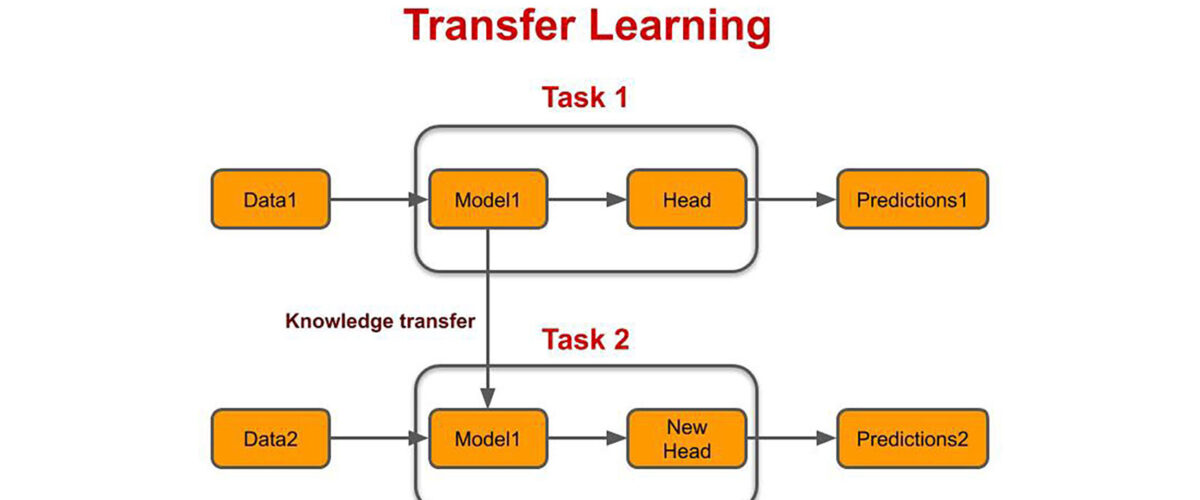

Transfer learning in generative AI is the practice of taking a model pre-trained on one task or dataset and reusing it to improve performance on a different but related task or dataset. Knowledge learned in one domain is carried over to another by transferring the learned features, representations, or weights to a new model or task.

In the context of generative AI, transfer learning is particularly useful when data is limited or when generating content in a new domain. Training a generative model from scratch can require large amounts of training data and compute; transfer learning instead reuses the knowledge and representations already captured by existing models.

One common approach to transfer learning in generative AI is fine-tuning. A pre-trained model, such as a GAN or VAE, is used as the starting point, and its parameters are further trained on a smaller dataset specific to the target domain. Fine-tuning adapts the model's learned representations to the new data, so that it generates content more relevant to the target domain.
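Fine-tuning can be sketched at toy scale with a one-dimensional Gaussian generative model whose two parameters (mean and log standard deviation) are fitted by gradient descent on negative log-likelihood. This model is an illustrative assumption, not a GAN or VAE: it is "pretrained" on a large synthetic source dataset, and its parameters are then the starting point for a short run of training on a small target dataset. The helper names (`nll`, `nll_grad`, `train`), datasets, learning rate, and step counts are all hypothetical choices.

```python
import math
import random

def nll(params, data):
    """Average negative log-likelihood of the data under N(mu, sigma^2)."""
    mu, log_sigma = params
    var = math.exp(2 * log_sigma)
    return sum(0.5 * math.log(2 * math.pi) + log_sigma + (x - mu) ** 2 / (2 * var)
               for x in data) / len(data)

def nll_grad(params, data):
    """Gradient of `nll` w.r.t. (mu, log_sigma); learning log_sigma keeps sigma > 0."""
    mu, log_sigma = params
    var = math.exp(2 * log_sigma)
    n = len(data)
    g_mu = sum(mu - x for x in data) / (n * var)
    g_log_sigma = 1 - sum((x - mu) ** 2 for x in data) / (n * var)
    return g_mu, g_log_sigma

def train(params, data, lr=0.1, steps=100):
    """Plain gradient descent from `params`; returns the updated (mu, log_sigma)."""
    mu, log_sigma = params
    for _ in range(steps):
        g_mu, g_log_sigma = nll_grad((mu, log_sigma), data)
        mu -= lr * g_mu
        log_sigma -= lr * g_log_sigma
    return mu, log_sigma

rng = random.Random(42)
source = [rng.gauss(5.0, 1.0) for _ in range(200)]  # large, related source dataset
target = [rng.gauss(5.5, 1.0) for _ in range(10)]   # small target-domain dataset

# "Pretraining": many steps from a generic starting point on the source data.
pretrained = train((0.0, 0.0), source, steps=2000)

# Fine-tuning: start from the pretrained parameters, take only a few steps on the target.
finetuned = train(pretrained, target, steps=20)

print("target NLL before fine-tuning:", nll(pretrained, target))
print("target NLL after fine-tuning: ", nll(finetuned, target))
```

Because only 20 steps are taken, the fine-tuned mean moves toward the target data while staying anchored to what was learned from the source; running many more steps on 10 samples would simply refit the small dataset and discard that prior knowledge. Fine-tuning a neural generator typically follows the same pattern (copy the pretrained weights, continue training briefly with a modest learning rate), only with far more parameters.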

Transfer learning in generative AI models has several benefits. Initializing the model with pre-trained weights, which already capture a great deal of prior knowledge, typically makes training faster. It can also improve model performance when the target dataset is small or lacks sufficient labeled examples, because the model draws on the generalization capabilities it acquired in a different but related domain rather than relying on the few target examples alone.

Transfer learning can also carry style, content, or other characteristics from one dataset to another. For example, a generative model trained on a large dataset of landscape photographs could be fine-tuned on a smaller set of photographs of specific cities, letting it generate realistic images of those cities while retaining the general style and quality it learned from the original dataset.

However, it is important to note that transfer learning in generative AI models also has its limitations. The effectiveness of transfer learning depends on the similarity between the pre-trained and target domains. If the domains are significantly different, the transferred knowledge may not be as beneficial. Careful consideration should be given to selecting appropriate pre-trained models and ensuring that the transfer aligns with the specific requirements of the target task or dataset.
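Both the training speed-up and its dependence on domain similarity can be seen with a toy one-dimensional Gaussian model trained by gradient descent. The sketch below, built on assumed synthetic data, counts how many steps are needed to fit a small target dataset when starting from (a) a generic initialization, (b) parameters fitted to a similar source domain, and (c) parameters fitted to a very different source domain. "Pretraining" here is the closed-form maximum-likelihood fit (sample mean and standard deviation), a shortcut that only works for this simple model; the names `steps_to_fit` and `fit_closed_form` and all numbers are hypothetical.

```python
import math
import random
import statistics

def grad(params, data):
    """Gradient of the mean Gaussian negative log-likelihood w.r.t. (mu, log_sigma)."""
    mu, log_sigma = params
    var = math.exp(2 * log_sigma)
    n = len(data)
    g_mu = sum(mu - x for x in data) / (n * var)
    g_log_sigma = 1 - sum((x - mu) ** 2 for x in data) / (n * var)
    return g_mu, g_log_sigma

def steps_to_fit(init, data, lr=0.1, tol=1e-4, max_steps=50_000):
    """Gradient descent from `init`; return the step at which the gradient
    norm first falls below `tol` (or `max_steps` if it never does)."""
    mu, log_sigma = init
    for step in range(max_steps):
        g_mu, g_log_sigma = grad((mu, log_sigma), data)
        if math.hypot(g_mu, g_log_sigma) < tol:
            return step
        mu -= lr * g_mu
        log_sigma -= lr * g_log_sigma
    return max_steps

def fit_closed_form(data):
    """Maximum-likelihood Gaussian fit, standing in for pretraining."""
    return statistics.fmean(data), math.log(statistics.pstdev(data))

rng = random.Random(0)
similar_source = [rng.gauss(5.0, 1.0) for _ in range(1000)]
distant_source = [rng.gauss(-10.0, 1.0) for _ in range(1000)]
target = [rng.gauss(5.5, 1.0) for _ in range(30)]

results = {
    "from scratch": steps_to_fit((0.0, 0.0), target),
    "similar source": steps_to_fit(fit_closed_form(similar_source), target),
    "distant source": steps_to_fit(fit_closed_form(distant_source), target),
}
print(results)
```

Only the ordering is meaningful here; exact counts depend on the learning rate, tolerance, and data. A well-matched source places the optimizer close to the target solution, whereas a distant source starts it further away than a generic initialization would, a toy analogue of the negative transfer described above.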

In summary, transfer learning in generative AI models allows us to leverage pre-trained models and transfer their learned knowledge to improve performance on new tasks or datasets. It provides a powerful technique to accelerate training, enhance model performance, and enable the generation of content in different domains with limited data.

Benefits of Transfer Learning in Generative AI Models

Transfer learning in generative AI models offers several significant benefits, including:

- Faster Training: Transfer learning allows models to initialize with pre-trained weights, which capture prior knowledge learned from a large dataset. By starting from a good initialization point, the model can converge faster during training, saving time and computational resources.

- Improved Performance: Pre-trained models have already learned useful features and representations from a large dataset, making them effective in capturing relevant patterns and structures. By transferring this knowledge to a new task or dataset, the generative AI model can benefit from the generalization capabilities of the pre-trained model, resulting in improved performance, even with limited data.

- Overcoming Data Limitations: Generating high-quality content often requires a large amount of training data, which may not be readily available for a specific task or domain. Transfer learning lets the model draw on knowledge from a related domain where more data is available, so it can still produce high-quality output from a limited target dataset.

- Knowledge Transfer Between Domains: Transfer learning facilitates the transfer of knowledge, style, or content from one dataset to another. It enables the model to inherit features, characteristics, or artistic styles from the pre-trained model and apply them to generate content in a different domain. For example, a model trained on painting styles can be fine-tuned to generate artwork in a specific artist’s style.

- Adaptability to New Tasks: Transfer learning lets a single pre-trained model serve as the starting point for a range of related tasks. Once a model has been trained on a large dataset, it can be fine-tuned for specific tasks, such as image-to-image translation or style transfer for an image model, or a particular kind of text generation for a language model, without extensive training from scratch. This adaptability allows rapid experimentation with different generative tasks.

- Reduced Computational Requirements: Training generative AI models from scratch can be computationally intensive and time-consuming, particularly with complex architectures or large datasets. Because the expensive pre-training has already been done, transfer learning reduces the compute needed for each new task, making generative modeling more efficient and accessible.
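Much of the compute saving in practice comes from freezing most pretrained parameters and updating only a small subset; in PyTorch, for instance, this is commonly done by setting `requires_grad = False` on the frozen parameters. The plain-Python sketch below mimics that idea on a toy two-parameter Gaussian model: parameters live in a dictionary, a hypothetical `trainable` set names the ones allowed to change, and gradients are computed only for those. The model, the names `finetune` and `GRADIENTS`, and all values are illustrative assumptions.

```python
import math

def grad_mu(p, data):
    """d(mean Gaussian NLL)/d(mu)."""
    var = math.exp(2 * p["log_sigma"])
    return sum(p["mu"] - x for x in data) / (len(data) * var)

def grad_log_sigma(p, data):
    """d(mean Gaussian NLL)/d(log_sigma)."""
    var = math.exp(2 * p["log_sigma"])
    return 1 - sum((x - p["mu"]) ** 2 for x in data) / (len(data) * var)

# Map each parameter name to the function that computes its gradient.
GRADIENTS = {"mu": grad_mu, "log_sigma": grad_log_sigma}

def finetune(params, data, trainable, lr=0.1, steps=50):
    """Update only the parameters named in `trainable`; frozen ones keep
    their pretrained values and their gradients are never computed."""
    p = dict(params)  # copy so the pretrained parameters stay untouched
    for _ in range(steps):
        grads = {name: GRADIENTS[name](p, data) for name in trainable}
        for name, g in grads.items():
            p[name] -= lr * g
    return p

pretrained = {"mu": 5.0, "log_sigma": 0.0}  # hypothetical pretrained values
target = [5.0, 6.5, 5.5, 7.0, 6.0]          # small target dataset
adapted = finetune(pretrained, target, trainable={"mu"})
print(adapted)
```

With `trainable={"mu"}` the spread learned during pretraining is reused as-is and only the mean adapts; passing `{"mu", "log_sigma"}` fine-tunes both. In a deep generator the frozen part would be most of the network, which is where savings in gradient computation and optimizer memory come from.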

Overall, transfer learning in generative AI models empowers researchers and practitioners to build better-performing models, address data limitations, and achieve desired results more quickly and effectively. It leverages existing knowledge, accelerates training, and enhances the overall capabilities of generative AI systems.

Conclusion

In conclusion, transfer learning in generative AI models is a powerful technique that allows the knowledge and representations learned from pre-trained models to be applied to new tasks or domains. It offers several benefits, including faster training, improved performance, overcoming data limitations, knowledge transfer between domains, adaptability to new tasks, and reduced computational requirements.

By building on pre-existing models, generative AI systems can start from learned weights, which accelerates training and often leads to better solutions. The knowledge transferred from pre-trained models improves the performance of generative models, even with limited data, because it captures relevant patterns and structures from the original dataset.

Transfer learning also enables the application of pre-trained models to new domains, allowing the model to inherit characteristics, styles, or artistic features. This facilitates the generation of content that aligns with specific requirements or desired styles.

Furthermore, transfer learning in generative AI models reduces the computational burden associated with training models from scratch. By leveraging pre-trained models, computational resources are saved, making generative AI more accessible and efficient.

However, it is important to carefully consider the similarity between the pre-trained and target domains to ensure effective transfer of knowledge. In some cases, transfer learning may not provide significant benefits if the domains are too dissimilar.

In summary, understanding transfer learning in generative AI models provides practitioners with a powerful tool to enhance model performance, overcome data limitations, and leverage pre-existing knowledge for faster and more efficient training. By harnessing the benefits of transfer learning, generative AI models can generate high-quality content and adapt to new tasks or domains with greater ease.
